Case Study - Adobe

A 10-year overnight success

It was raining outside the conference room window in San Francisco as our team finished preparing for our next pivot / persevere / kill decision.

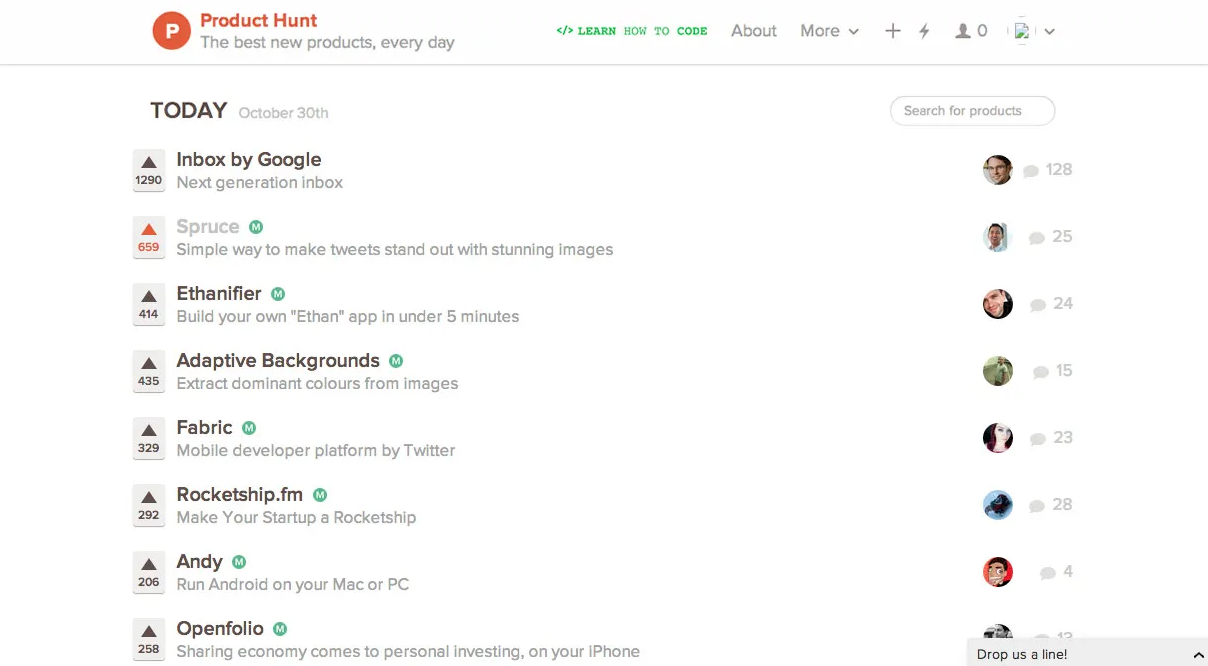

The product in question was Spruce, the off-brand experiment created in collaboration between my team and Adobe. Spruce had generated a bit of buzz, especially since being featured on Product Hunt. (Spruce topped Product Hunt until Inbox from Google came out that same day.)

After being featured on Product Hunt, Spruce was quickly written about in Huffington Post and various other social media / tech blogs.

While all of the recognition was very positive in tone, everyone writing about Spruce had assumed it was from a new startup in San Francisco.

Little did they know it was an off-brand, corporate Minimum Viable Product (MVP) we had built to test the market.

Our goal is to test the market with a Minimum Viable Product and generate qualitative and quantitative data to make an investment decision on whether or not we should continue.

In the case of Spruce, it all started with an internal shark tank at Adobe.

A small group of product managers pitched their ideas to us, the reward being a dedicated cross-functional team to help test their vision against reality. All of the pitches were interesting, but we chose the idea from Tom Nguyen.

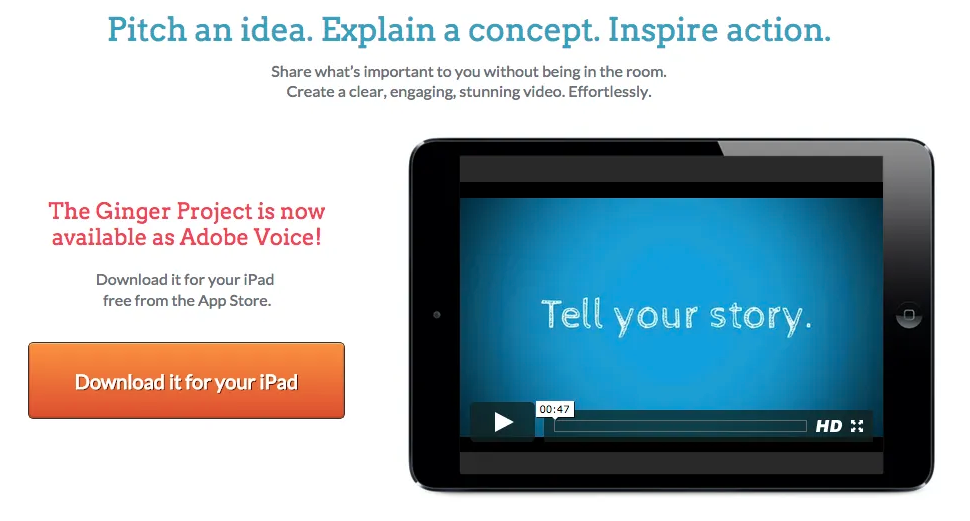

Tom helped create the magic behind Adobe Voice, a storytelling tool that Apple named one of the Best Apps of the Year. Not coincidentally, he also started Voice as an off-brand experiment.

Inception

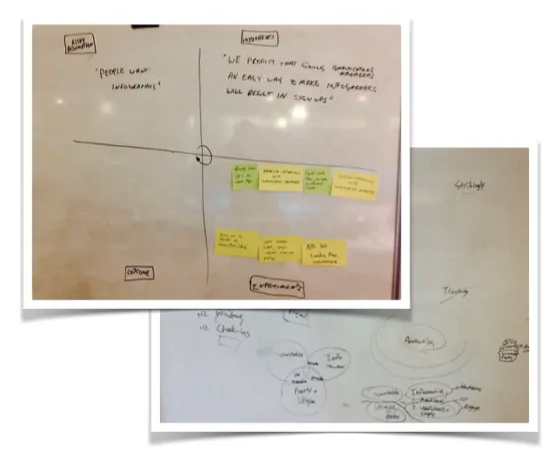

After the shark tank was concluded, we began with an inception.

The team deep dived into our vision, goal, strategy, customer segments, market competition, hypotheses and experiments.

The exercises we use are drawn from Lean Startup, Design Thinking and Agile Software Development.

Near the end of the inception we came up with our startup name and branding, Spruce.

“The good news is that startup brands are so bad, you shouldn’t spend more than half a day coming up with one for your off brand MVP.”

Far too much time is spent in pointless meetings debating brand and color when you could have just enough brand to test the market instead. Because Spruce was off-brand, we didn’t need countless hours of meetings and approvals with corporate.

The last part of our inception was the team working agreement.

This is important because if you start sprinting without understanding how you’ll work together, it can all fall apart very rapidly. In the team working agreement, we had a conversation about our daily standups, retrospectives, planning meetings and pivot/persevere/kill meetings. We agreed on what tools we’d use and, most importantly, on our team and personal goals. For example, each of us may have a personal goal that aligns to the team goal, so we found it valuable to speak openly about these from the beginning and clear the air.

Customer Interviews

Our next step following the inception was to find Communication Managers and interview them.

We found them through a combination of our personal network, interviewee referrals, Twitter and local meetups in the San Francisco Bay Area.

The interview process can get muddy, especially if you are pitching your solution from the very beginning.

“Most of the customer interviews I observe are solution monologues.”

Instead of jumping right into the solution, we performed 15–20 problem interviews simply to understand the problem space in more detail.

We did these interviews in pairs, with one person conducting the interview and the other person taking notes. This was important because when done solo, rarely can you ask the question, actively listen, take good notes and have the next question lined up. After each interview we’d do a quick debrief and cover what went well and if there was anything we’d like to change.

In learning more about the problems of Communication Managers, it became clear that it is more and more difficult to stand out in the world of social media, specifically Twitter. We began to understand which Communication Managers felt the pain enough to actively seek a solution and what job people would hire Spruce for, if we were to build it.

Once we had agreed that, from a qualitative perspective, the problem existed and was painful enough, we narrowed our focus to creating Twitter-sized images for social media.

The team then crafted our guiding principles:

Simple, quick, visually appealing images.

Acquisition Tests

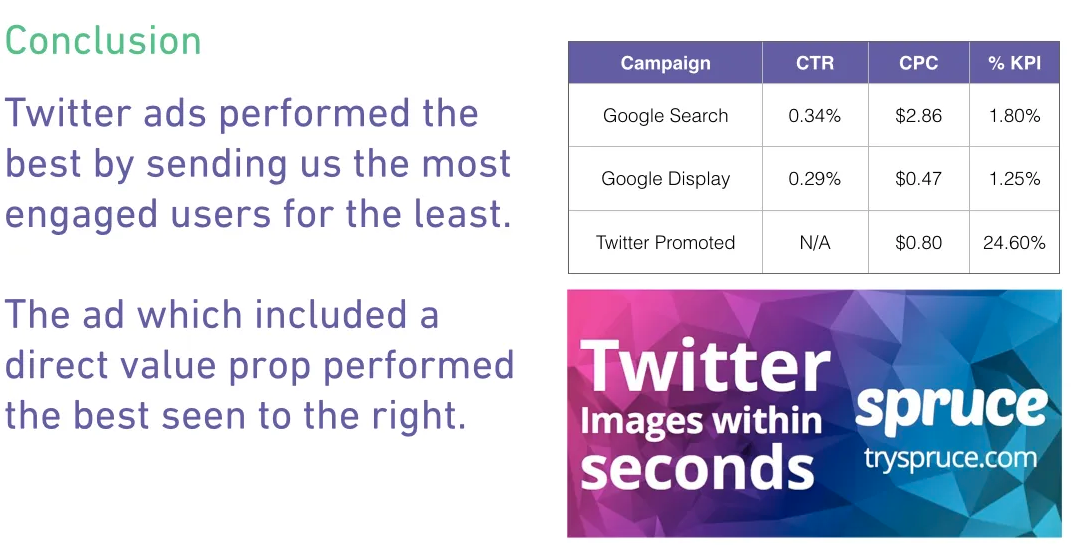

After our interviews, we wanted to test our value proposition at a larger scale, so we began to spend $100/day on Google Display, Google Search and Twitter Ads.

Due to the nature of Spruce, we realized quickly that Twitter was the clear winner.

Once we began to effectively acquire target customers, we wanted to further test our value proposition and learn about patterns of input.

The Concierge Test

We needed to learn about the types of images our customers would request, beyond just asking them. What people say and what people do are often different, so it is important to balance interviews with action.

However, we also didn’t want to start writing software just yet, as our uncertainty was still very high. We decided to create a Concierge Test.

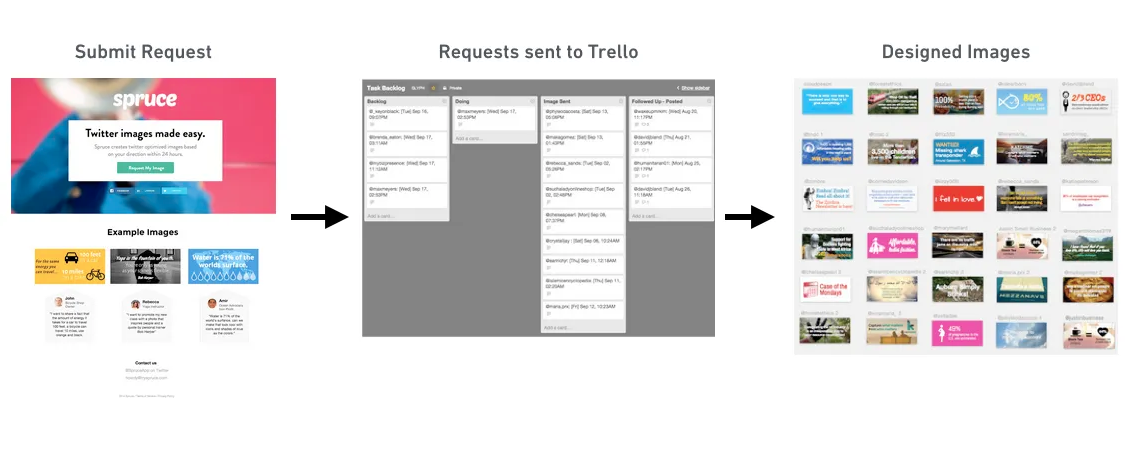

This included a Google Form on our landing page that would allow customers to submit an image request. The Google Form would fire off an email which in turn created a Trello card. Our designer would then pull the card into the In Progress column, create the image and send it back to the customer.

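The flow looked like this: Google Form submission, then an email, then a Trello card, then the designer creating the image and sending it back to the customer.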

We knew this wouldn’t scale and we accepted the fact that we may churn through a few early adopters of the service in the process.
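The concierge flow described above can be sketched as a tiny in-memory model. This is only an illustration of the manual hand-offs, not the setup we actually ran: the `ImageRequest` fields, the `ConciergeBoard` class and the "Requested" and "Sent" column names are assumptions made for the example (only the In Progress column comes from our real board), and no Google Forms or Trello APIs are called.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ImageRequest:
    """One submission from the landing-page form (fields are hypothetical)."""
    customer_email: str
    description: str


@dataclass
class ConciergeBoard:
    """In-memory stand-in for the Trello board; column names are assumed."""
    columns: Dict[str, List[ImageRequest]] = field(
        default_factory=lambda: {"Requested": [], "In Progress": [], "Sent": []}
    )

    def submit(self, request: ImageRequest) -> None:
        # Form submission -> email -> new card in the first column.
        self.columns["Requested"].append(request)

    def move(self, request: ImageRequest, src: str, dst: str) -> None:
        # A person (the designer) moves the card by hand; nothing is automated.
        self.columns[src].remove(request)
        self.columns[dst].append(request)


board = ConciergeBoard()
req = ImageRequest("customer@example.com", "Quote over a skyline photo")
board.submit(req)
board.move(req, "Requested", "In Progress")  # designer picks up the card
board.move(req, "In Progress", "Sent")       # finished image emailed back
print({name: len(cards) for name, cards in board.columns.items()})
```

The point of the sketch is that every step between request and delivery is a human action, which is exactly why the test is cheap to set up and why it cannot scale.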

We quickly learned that over 50% of the requests were for text over image.

Much to our dismay, people weren’t sharing the images we created for them on Twitter, even though they said that they would.

In our follow up interviews, people stated that the image didn’t match their expectations and that the turnaround was too long.

“The challenge with a Minimum Viable Product is that you decide what’s Minimum, but the customer determines if it’s Viable.”

Needless to say, we were a bit demoralized, but we came together as a team and decided what to do next by coming up with a new series of hypotheses.

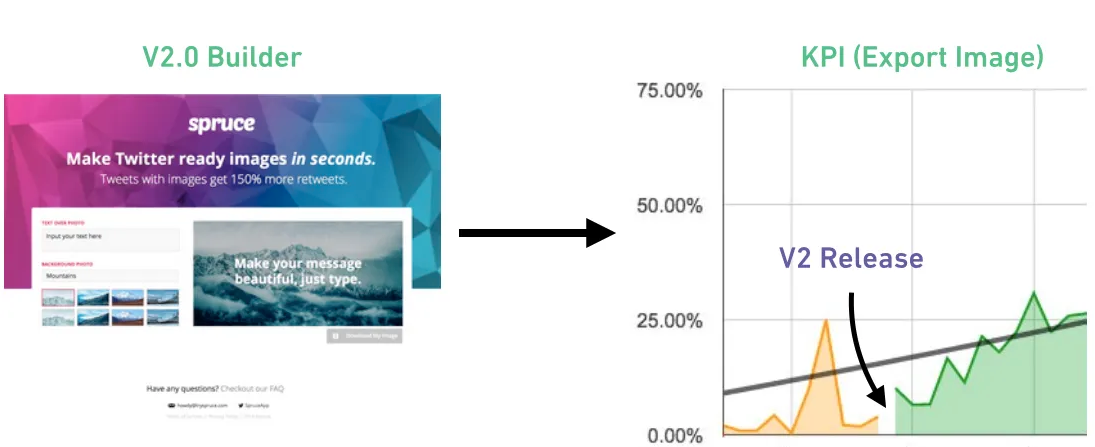

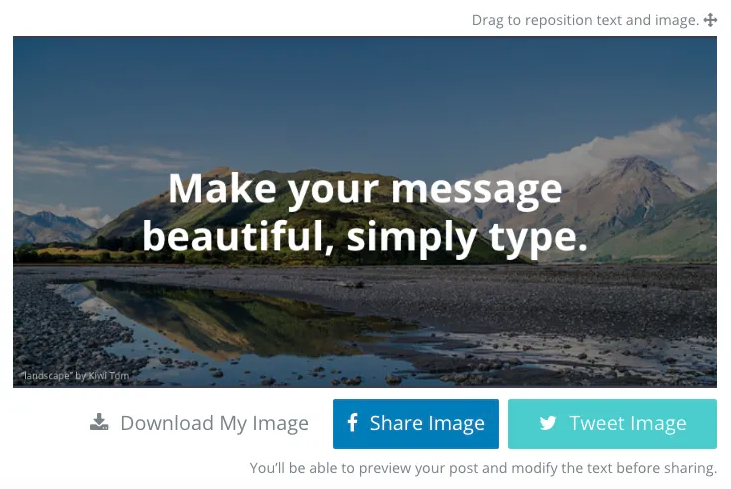

Our decision was to build a minimal web app version of the process that addressed both the gap in image expectations and the slow turnaround time.

Iterating on Spruce

Once the web app version of Spruce was live, we immediately saw an increase in our acquisition and activation metrics. The team’s morale improved dramatically. Since we had curated a list of early adopters by this point, it gave us an opportunity to engage people over social media and our email list.

The Spruce KPI we were most concerned about at the time was a customer using the “download image” button. If customers weren’t downloading the image they had just created, then there was little chance they’d share it on Twitter.

Once we observed people downloading the images, we then had to find a way to measure if they were sharing the images to Twitter. The team realized our next iteration was to have a “Tweet Image” button, so that the friction of downloading and then uploading was removed.
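A rough way to reason about this create, download, share funnel is to count how many users reach each step. The sketch below is illustrative only: the event names, the `funnel_counts` helper and the sample events are all made up for the example, and it does not reflect how Mixpanel or any other analytics service computes funnels.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical event names for the three steps we cared about.
FUNNEL = ["image_created", "image_downloaded", "image_tweeted"]


def funnel_counts(events: Iterable[Tuple[str, str]]) -> List[int]:
    """Count users reaching each step, requiring every earlier step as well."""
    seen: Dict[str, Set[str]] = defaultdict(set)
    for user_id, event in events:
        seen[event].add(user_id)

    counts: List[int] = []
    reached = None
    for step in FUNNEL:
        # Keep only users who also completed all previous steps.
        reached = seen[step] if reached is None else reached & seen[step]
        counts.append(len(reached))
    return counts


# Made-up sample data: (user_id, event) pairs.
sample_events = [
    ("u1", "image_created"), ("u1", "image_downloaded"), ("u1", "image_tweeted"),
    ("u2", "image_created"), ("u2", "image_downloaded"),
    ("u3", "image_created"),
    ("u4", "image_created"),
]

counts = funnel_counts(sample_events)
for step, count in zip(FUNNEL, counts):
    print(f"{step}: {count} users ({count / counts[0]:.0%} of creators)")
```

Without a "Tweet Image" button there is no event for the last step at all, which is why the download button had to serve as a proxy for sharing.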

In addition to talking to customers, we continuously looked at the data in services like Mixpanel and LuckyOrange.

For example, in reviewing our LuckyOrange data we noticed that customers were not downloading and sharing. Instead, they kept cycling through the text, image search and positioning functionality over and over again.

After reviewing this as a team, we had a hypothesis that our search results were not sufficient yet to allow people to find what they wanted right away.

Sure enough, after we improved search results we observed an uptick in our image sharing and improvements in the LuckyOrange data.

Revisiting Our Core Principles

As I mentioned before, our core principles for Spruce were around simple, quick, and visually appealing images.

We could have easily just created a feature backlog and started burning it down, but instead we checked each feature against our principles. The team also created hypotheses behind each feature, to focus on what we wanted to learn.

Now we had people creating and sharing images, but we found it difficult to have confidence in the sharing loop. We decided to add Twitter Authentication to Spruce because it would help us close the loop and give the customer a faster sharing experience.

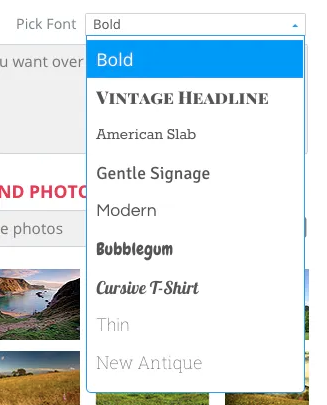

Next, we saw a drop-off in our cohorts after 1–2 images were created. At this point in time we still only supported one font. The feedback we heard was that it wasn’t original and customizable enough to use more than a few times.

In turn, we added a few fonts to measure whether people would in fact create more images over time.
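One simple way to frame this kind of drop-off is to look at how many users stop at one or two images versus how many keep going. The sketch below assumes a flat list with one user id per created image; the helper names and the sample data are hypothetical and not taken from our actual cohort reports.

```python
from collections import Counter
from typing import Dict, Iterable


def users_by_image_count(image_owner_ids: Iterable[str]) -> Dict[int, int]:
    """Map 'images created' -> 'number of users who created exactly that many'."""
    per_user = Counter(image_owner_ids)
    return dict(Counter(per_user.values()))


def share_beyond(distribution: Dict[int, int], threshold: int) -> float:
    """Fraction of users who created more than `threshold` images."""
    total = sum(distribution.values())
    if total == 0:
        return 0.0
    beyond = sum(n for images, n in distribution.items() if images > threshold)
    return beyond / total


# Made-up sample: one entry per created image, keyed by user id.
sample = ["u1", "u1", "u2", "u3", "u3", "u3", "u4"]
dist = users_by_image_count(sample)
share = share_beyond(dist, 2)
print(dist, f"{share:.0%} of users created more than 2 images")
```

Comparing this share for cohorts that signed up before and after a change, such as adding fonts, gives a quick read on whether the change moved repeat usage.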

We then tested other platforms, to understand if people would use Spruce for Facebook.

This style of rapid iteration, backed by hypotheses and by qualitative and quantitative data, led us over the course of 12 weeks to:

7000+ Created Images

1000+ Total Users

400+ Newsletter Signups

150+ Weekly Active Users

Pivot, Persevere or Kill?

As you may have guessed, the team decided to persevere given the amount of traction created without the Adobe brand in as little as 12 weeks of experimentation.

Fast forward

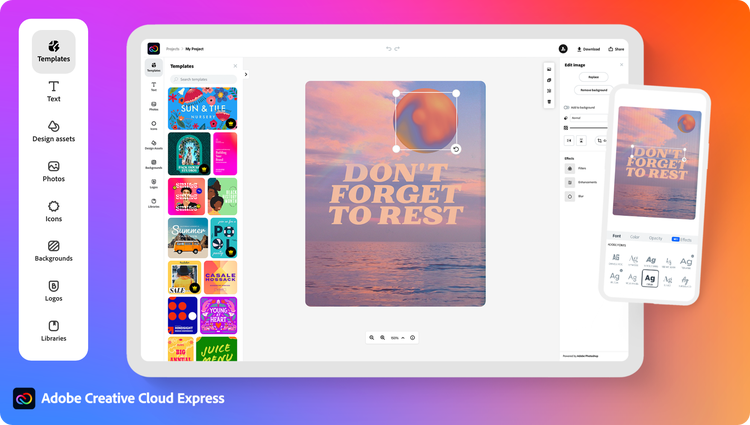

With countless iterations of customer-focused testing, both Adobe Spruce and Adobe Voice have evolved over the years into one of the hottest apps on the internet, Adobe Express.

The journey from an idea to a successful product can be a long and winding one.

This goes to show that with some rigorous testing and core principles to guide you, anything is possible.

Turn This Experiment Into Your Next Breakthrough

Adobe didn’t iterate by guessing; they ran focused tests that built clarity and confidence. What experiment could unlock momentum for you?